Delivering a reliable rate-limiting service is key to protecting backend systems and enforcing usage policies. Below is a straightforward, production-ready design that balances performance, accuracy, and operational simplicity.

1. Goals & Requirements

Functional

Enforce per-key quotas (e.g. per user or API client)

Allow controlled bursts and steady rates

Support dynamic updates to rate rules

Non-Functional

Handle millions of requests per second

Keep p99 latency under 50 ms

Scale horizontally across availability zones

Survive node- and zone-level failures

Maintain 60–70% average utilization, with autoscaling

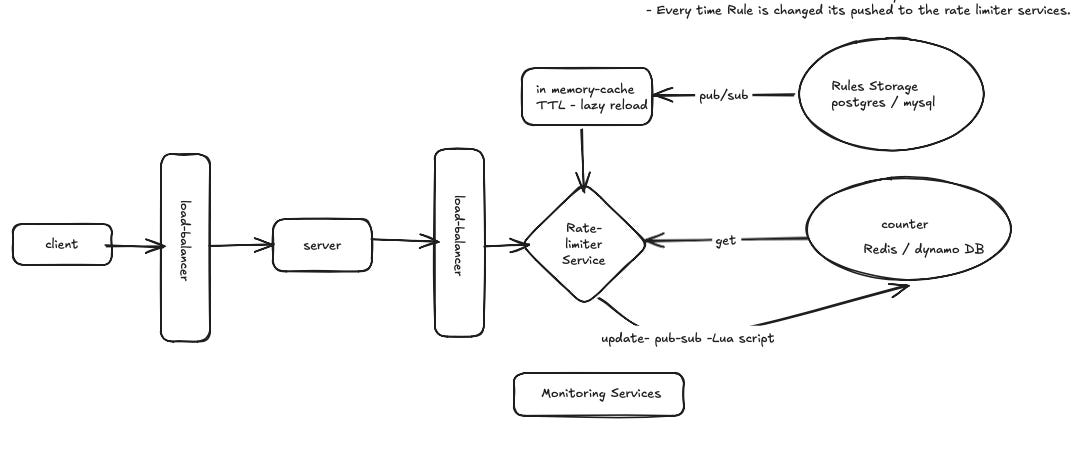

2. High-Level Architecture

Load Balancer

Routes each client to a consistent limiter node, enabling cache locality.Rate Limiter Nodes

In-memory cache of rate rules, updated via Pub/Sub.

On each request: fetch rule → invoke Redis Lua script → return allow or reject.

Emit metrics (latency, rejects, cache hits).

Redis Cluster

Sharded by key-hash (e.g. 128 shards × 3 replicas).

Stores minimal per-key state (token counts or window counters).

Scripts perform refill, check, and consume in one atomic call.

Pub/Sub Stream

Publishes rule changes (limit, burst size).

Nodes subscribe and refresh cache entries by version or timestamp.

3. Counter Storage & Sharding

Raw footprint:

1 billion keys × 1 KB each = 1 × 10¹² bytes ≈ 1 TBOptimized:

Pack key (8 B) + counter (8 B) → 16 B/entry → ~16 GB rawReplication: 3× → ~48 GB per region

Shard plan:

128 logical shards → ~125 million keys/shard → ~2 GB/shard

Deploy ~4 shards per node → ~32 nodes total

4. Core Algorithms

| Fixed-Window Counter | O(1) per window | No | ±window size error |

| Sliding-Window Log | O(R) timestamps | Yes | Exact |

| Sliding-Window Counter | O(1) | Approximate | Bounded error |

| Token-Bucket | O(1) (2 fields)| Yes (≤ bucket size)| Smooth |

| Leaky-Bucket | Queue length | No (queue delays) | Depends on queue |

Recommended: Token-Bucket or Sliding-Window Counter with a single Redis Lua script for atomic refill, check, and consume.

5. Atomic Request Flow

Lookup rule in local cache.

Redis Lua script:

Refill tokens or rotate counters based on timestamp

Compare against limit

Decrement and return result in one call

Response: HTTP 200 (allow) or 429 (reject) with headers:

X-RateLimit-LimitX-RateLimit-RemainingX-RateLimit-Reset

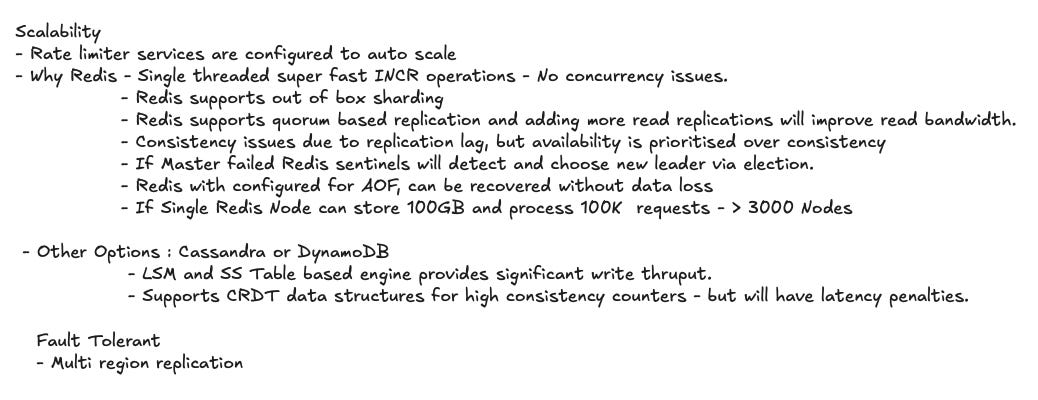

6. Availability & Scaling

Masterless data store: Redis Cluster or DynamoDB global tables for zero-downtime failover.

Multi-AZ replicas: each shard spans ≥ 3 zones.

Client retries: exponential backoff + configurable “fail-open” for noncritical paths.

Autoscaling: trigger on p99 latency or reject-rate thresholds.

7. Observability & Alerts

Key metrics:

Reject rate (%)

p50/p95/p99 latency

Cache hit ratio

Pub/Sub lag for rule updates

Alerts:

Reject rate > 1% sustained for 5 minutes

p99 latency > 50 ms

Pub/Sub lag > 1 s